Semantic and Episodic Network for Long-Form Video Understanding

A novel method that simulates episodic memory accumulation to capture action sequences and reinforces them with semantic knowledge dispersed throughout the video.

Paper / Code

Abstract

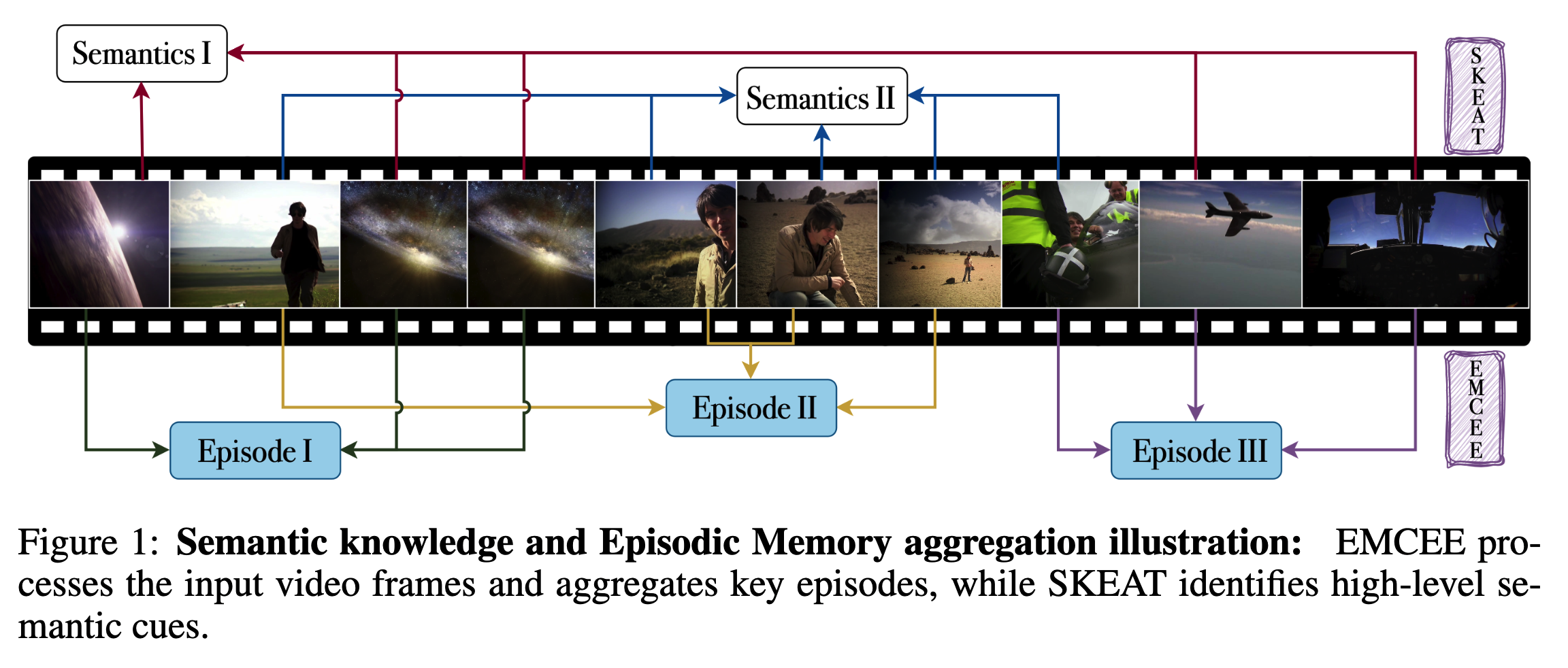

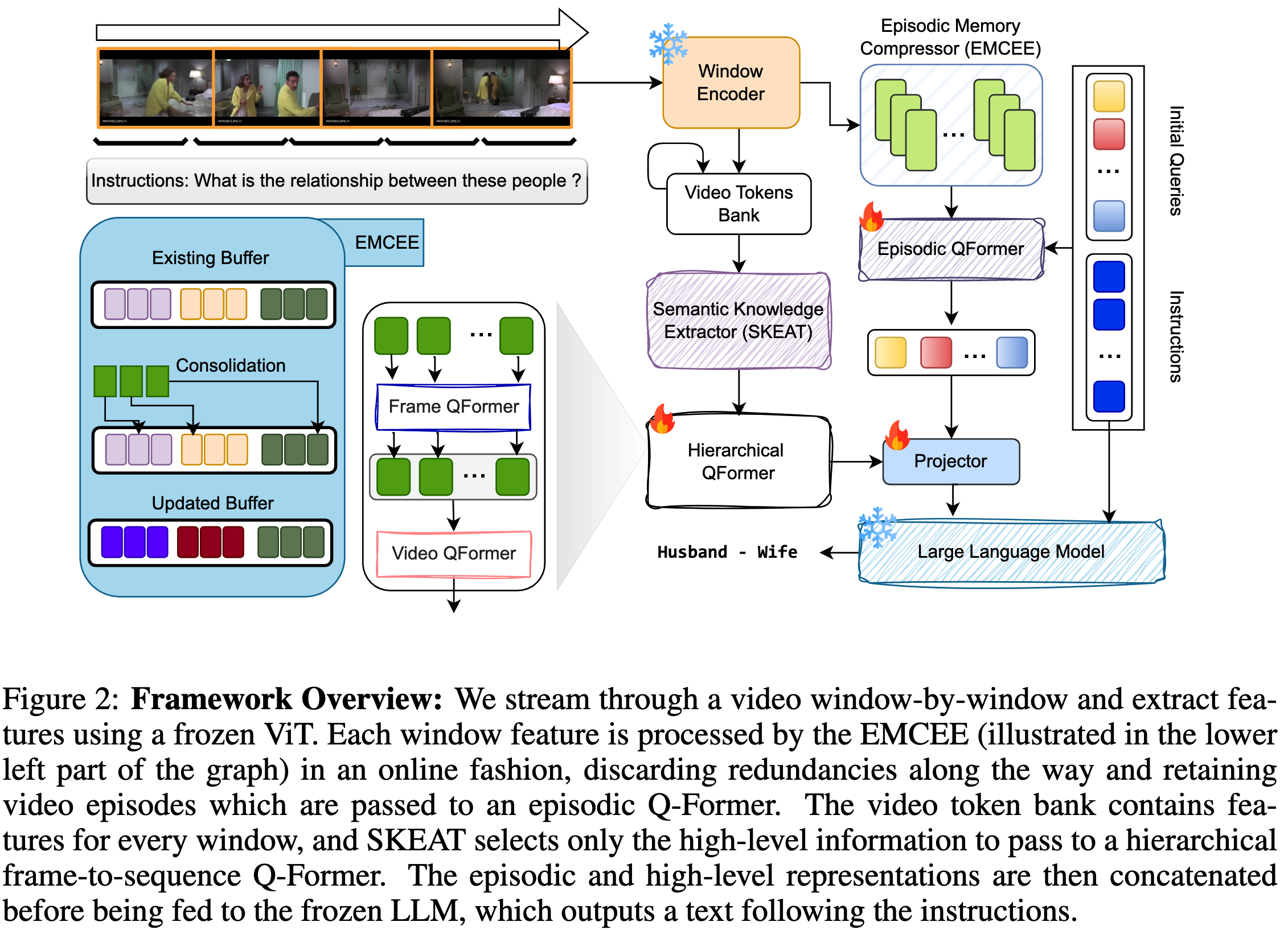

While existing research often treats long-form videos as extended short videos, we propose a novel approach that more accurately reflects human cognition. This paper introduces SCENE: SemantiC and Episodic NEtwork for Long-Form Video Understanding, a model that simulates episodic memory accumulation to capture action sequences and reinforces them with semantic knowledge dispersed throughout the video. Our work makes two key contributions. First, we develop an Episodic Memory ComprEssor (EMCEE) module that efficiently aggregates crucial representations from micro to semi-macro levels. Second, we propose a Semantic Knowledge ExtrAcTor (SKEAT) that enhances these aggregated representations with semantic information by focusing on the broader context, dramatically reducing feature dimensionality while preserving relevant macro-level information. Extensive experiments demonstrate that SCENE achieves state-of-the-art performance across multiple long-video understanding benchmarks in both zero-shot and fully-supervised settings. Our code will be made public at SCENE.

Main Contributions

Our key contributions are as follows:

- We propose an Episodic Memory ComprEssor (EMCEE) that streams through the video and retains important episodes by aggregating similar scenes. EMCEE enables efficient processing of extended video sequences while preserving temporal coherence and narrative structure.

- We develop a Semantic Knowledge ExtrAcTor (SKEAT) that distills high-level semantic cues dispersed throughout the video, giving the model a cohesive view of the context and themes of long-form videos.
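
To make the idea of "keeping important episodes by aggregating similar scenes" concrete, here is a minimal sketch of one way a streaming memory compressor could work. It is not the EMCEE implementation: the merging rule (average the most similar pair of adjacent entries once a fixed capacity is exceeded), the cosine similarity measure, and the names `compress_stream` and `capacity` are all assumptions made for illustration.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Vector = List[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def compress_stream(
    frames: Iterable[Sequence[float]], capacity: int
) -> Tuple[List[Vector], List[int]]:
    """Stream frame features into a memory of at most `capacity` episodes.

    Hypothetical rule: when the memory overflows, merge the two
    temporally adjacent entries with the highest cosine similarity,
    using a count-weighted average. Merging only adjacent entries
    keeps the episodes in temporal order.
    """
    if capacity < 1:
        raise ValueError("capacity must be at least 1")

    episodes: List[Vector] = []  # one feature vector per episode
    counts: List[int] = []       # number of frames merged into each episode

    for frame in frames:
        episodes.append([float(x) for x in frame])
        counts.append(1)
        if len(episodes) > capacity:
            # Index of the most similar adjacent pair (i, i + 1).
            best = max(
                range(len(episodes) - 1),
                key=lambda i: cosine(episodes[i], episodes[i + 1]),
            )
            total = counts[best] + counts[best + 1]
            merged = [
                (counts[best] * x + counts[best + 1] * y) / total
                for x, y in zip(episodes[best], episodes[best + 1])
            ]
            # Replace the pair with its merged representation.
            episodes[best:best + 2] = [merged]
            counts[best:best + 2] = [total]

    return episodes, counts


if __name__ == "__main__":
    # Two visually distinct "scenes", two frames each.
    toy_frames = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
    memory, sizes = compress_stream(toy_frames, capacity=2)
    print(memory, sizes)
```

In this toy run the four frames collapse into two episodes, one per scene, while the memory size stays bounded regardless of video length. A real system would operate on learned visual tokens rather than hand-written vectors.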

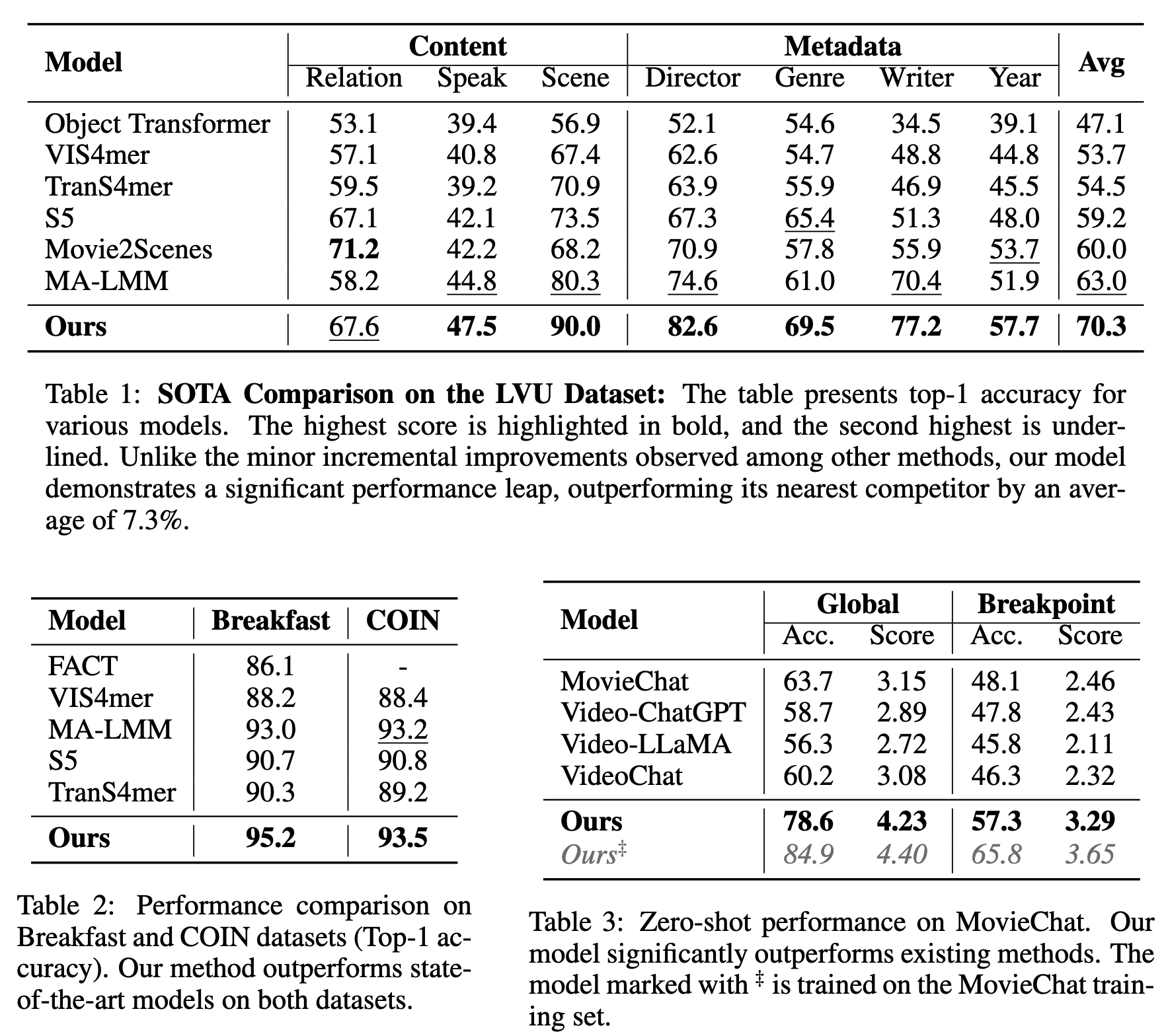

Results and Ablations