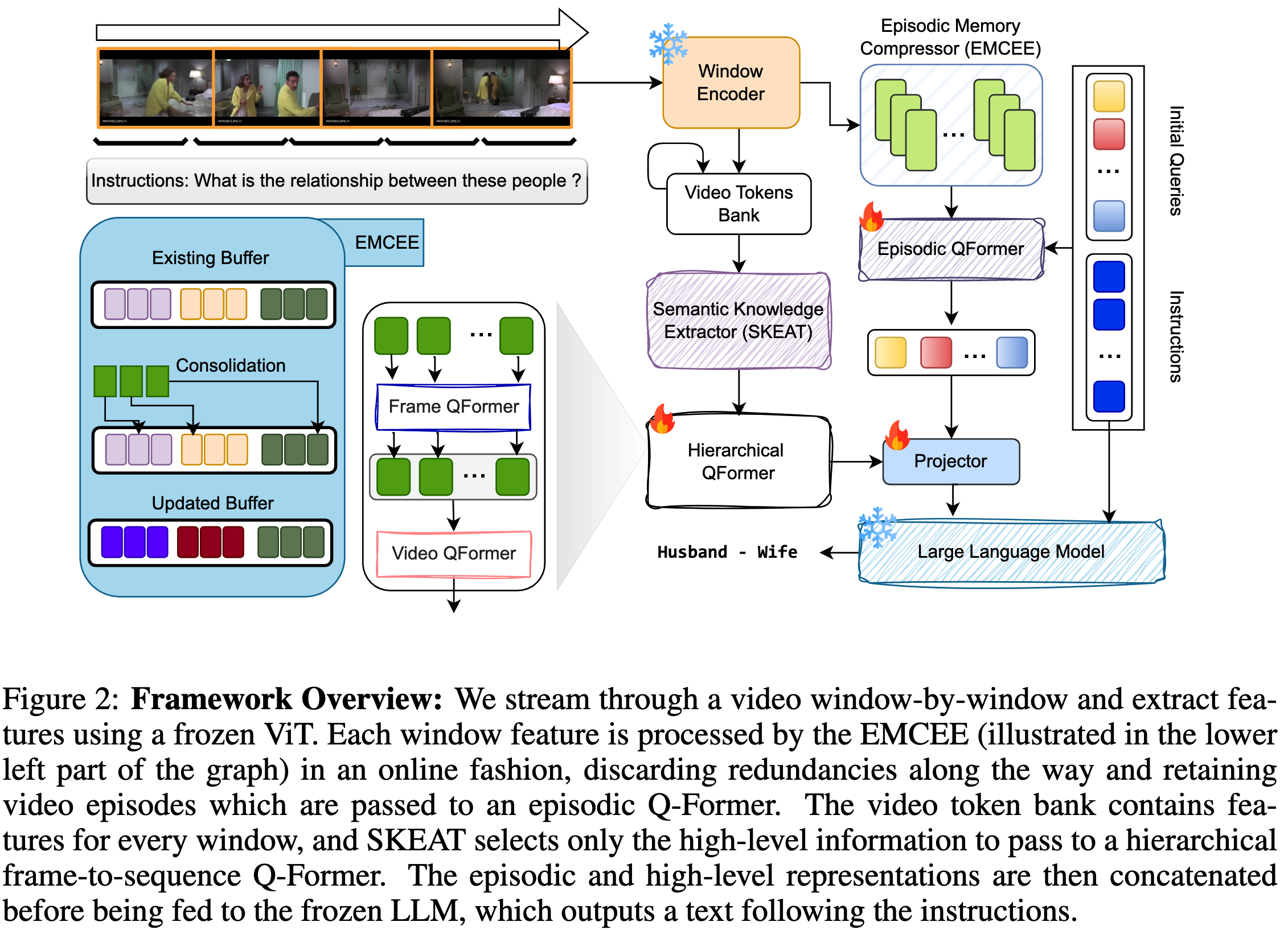

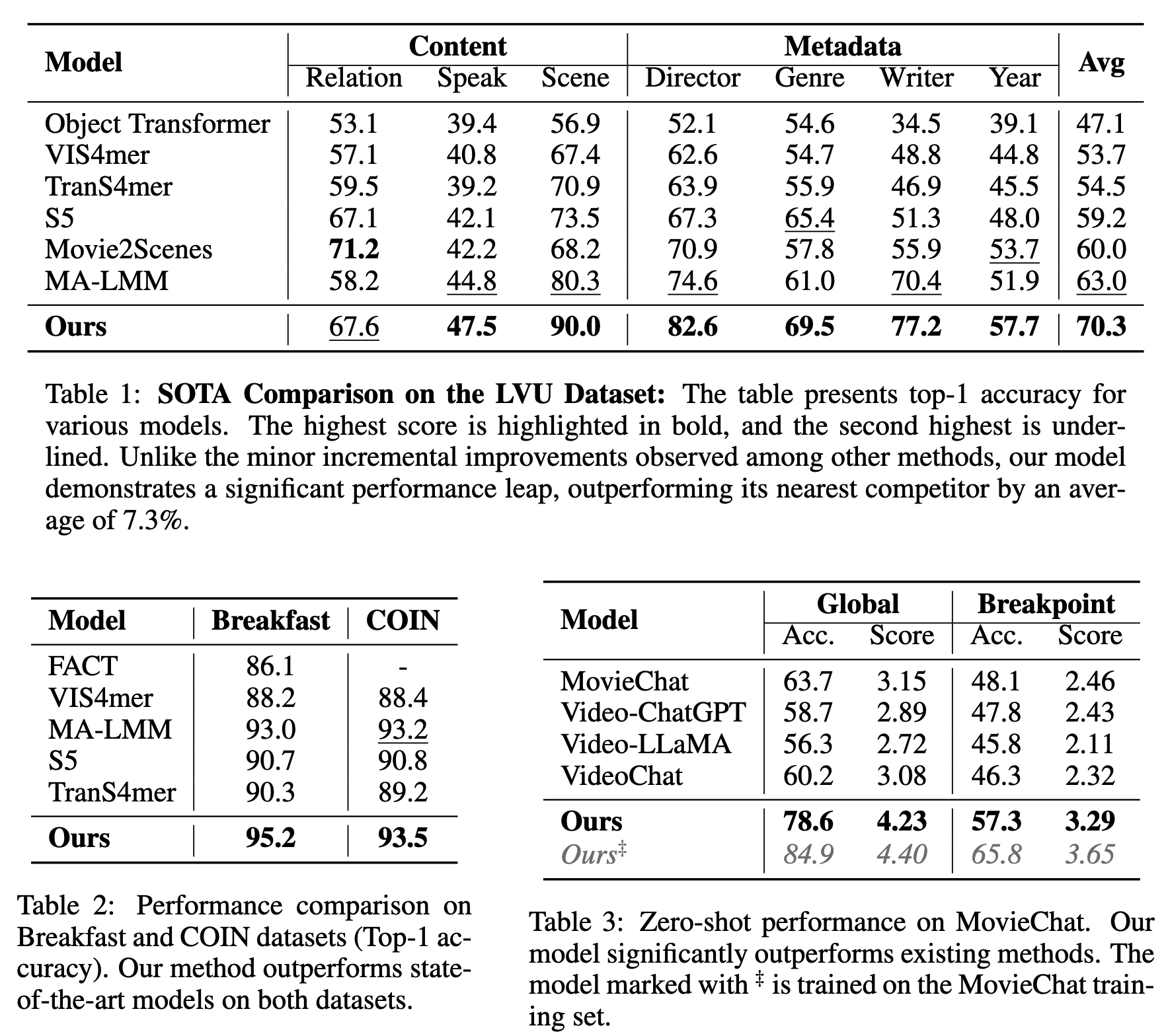

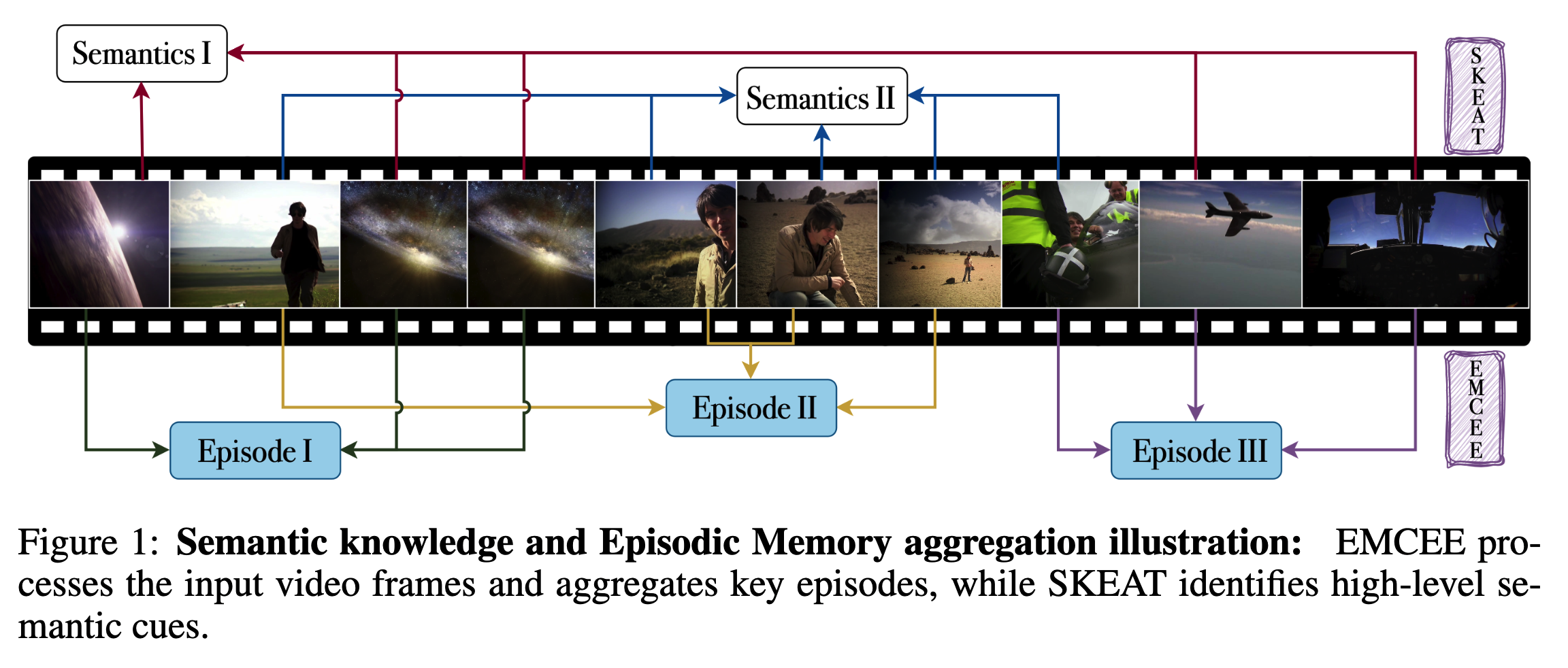

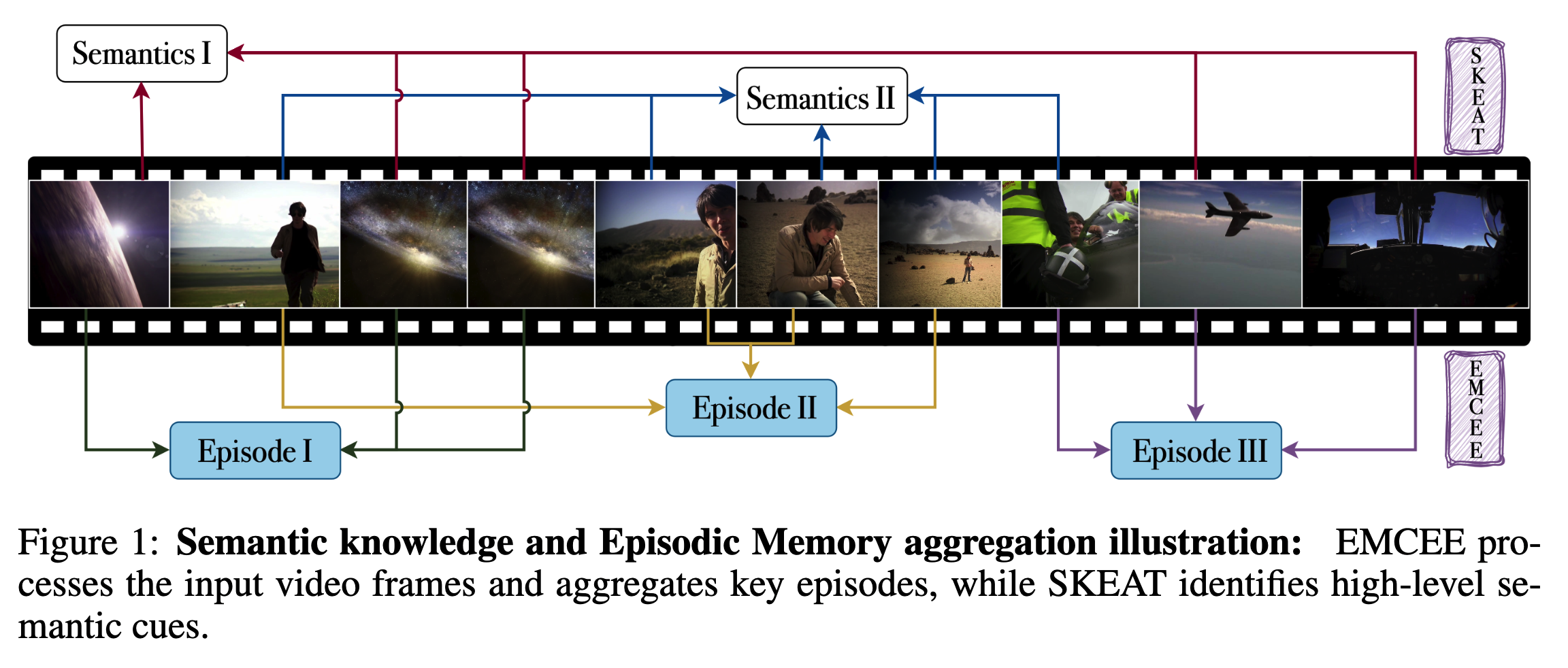

Existing research often treats long-form videos as extended short videos; we propose an approach that more closely reflects human cognition. This paper introduces SCENE: SemantiC and Episodic NEtwork for Long-Form Video Understanding, a model that simulates episodic memory accumulation to capture action sequences and reinforces them with semantic knowledge dispersed throughout the video. Our work makes two key contributions. First, we develop an Episodic Memory ComprEssor (EMCEE) module that efficiently aggregates crucial representations from micro to semi-macro levels. Second, we propose a Semantic Knowledge ExtrAcTor (SKEAT) that enhances these aggregated representations with semantic information by focusing on the broader context, substantially reducing feature dimensionality while preserving relevant macro-level information. Extensive experiments demonstrate that SCENE achieves state-of-the-art performance across multiple long-video understanding benchmarks in both zero-shot and fully supervised settings. Our code will be made public at SCENE.

Our key contributions are as follows: